In practical terms, latency is the time between a user action and the response of the website or application to that action: for instance, the delay between when a user clicks a link to a webpage and when the browser displays that webpage. The increased latency is not related to the wireless network.

Bandwidth has to do with how wide or narrow a pipe is.

What does latency mean in WiFi? Latency is measured in milliseconds and indicates the time between requesting information on the internet and its arrival. Network connections in which only small delays occur are called low-latency networks, whereas network connections that suffer from long delays are called high-latency networks. In computer networking, latency is an expression of how much time it takes for a data packet to travel from one designated point to another.

In terms of video streaming, end-to-end latency is the total time elapsed between when a frame is captured on a transmitting device and the exact instant when it is displayed on the receiving device. Because it's a measure of time delay, you want your latency to be as low as possible. The higher the number, the more delay you experience.
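As a rough illustration of that definition, the sketch below subtracts each frame's capture time from its display time. It assumes both devices share a synchronized clock, and the function name and timestamps are hypothetical values chosen for the example, not measurements.

```python
from statistics import mean


def frame_latencies_ms(capture_ts, display_ts):
    """Return per-frame end-to-end latency in milliseconds.

    Both lists hold timestamps in seconds, one entry per frame, and are
    assumed to come from clocks that are synchronized with each other.
    """
    if len(capture_ts) != len(display_ts):
        raise ValueError("each captured frame needs a matching display time")
    return [
        (shown - captured) * 1000.0
        for captured, shown in zip(capture_ts, display_ts)
    ]


# Hypothetical timestamps for three frames of a ~30 fps stream.
captured = [0.000, 0.033, 0.066]
displayed = [0.120, 0.155, 0.190]

latencies = frame_latencies_ms(captured, displayed)
print(f"average end-to-end latency: {mean(latencies):.1f} ms")
```

In a real pipeline the hard part is usually getting trustworthy timestamps on both ends, not the subtraction itself.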

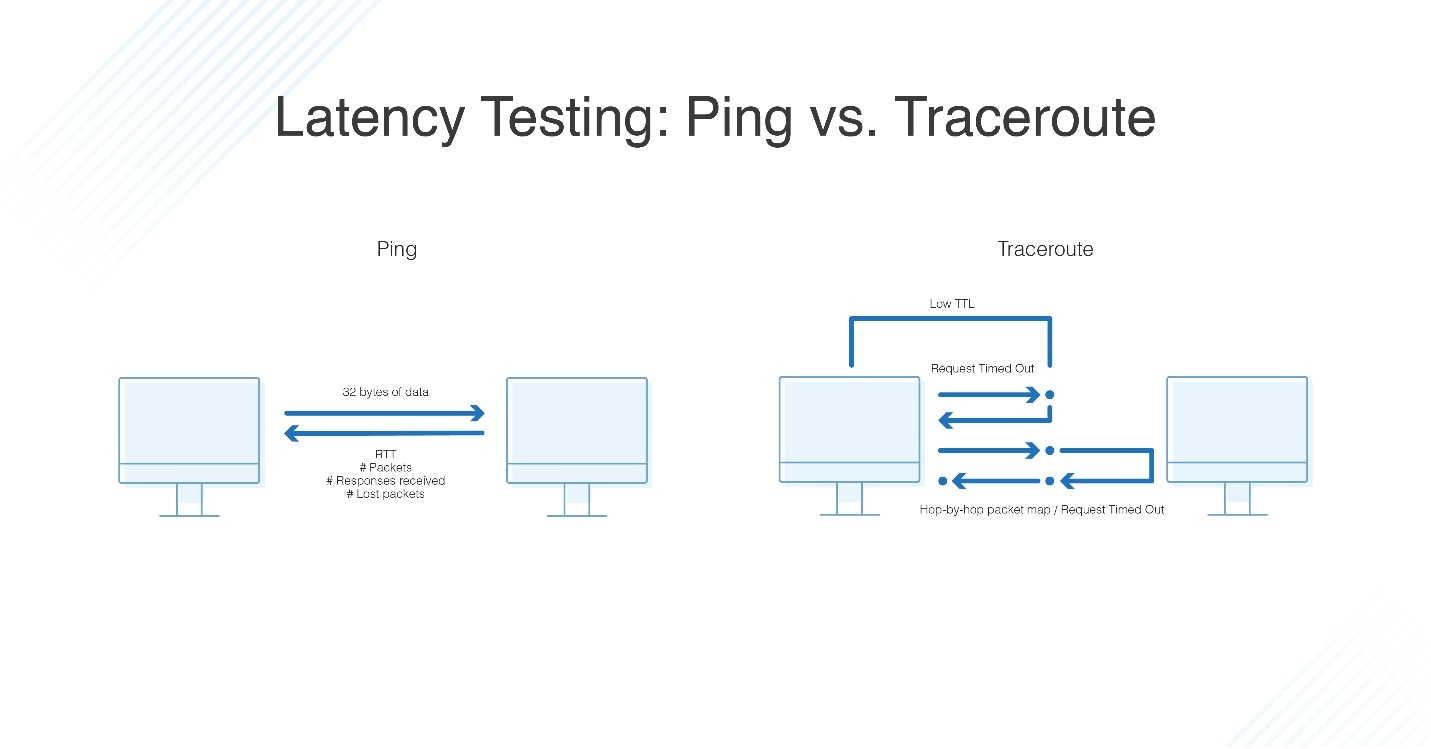

To test this, your computer sends a ping, a small packet of information, to a remote server and measures how long it takes for the reply to come back. High latency creates bottlenecks in any network communication. In simpler terms, DNS latency is the amount of time it takes a DNS query to reach its destination and for the answer to come back.
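A real ping uses ICMP, which normally needs elevated privileges to send from a script. As a stand-in, the sketch below times how long a TCP connection takes to open, which costs roughly one round trip to the server. The function names are made up for this example, and the result includes some operating-system overhead, so treat it as an approximation.

```python
import socket
import statistics
import time


def tcp_rtt_ms(host, port, timeout=2.0):
    """Approximate round-trip time in ms by timing a TCP handshake."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def median_rtt_ms(host, port, samples=5):
    """Take several measurements and return the median to smooth out spikes."""
    return statistics.median(tcp_rtt_ms(host, port) for _ in range(samples))


# Example call (host and port are placeholders, not a recommendation):
# print(f"{median_rtt_ms('example.com', 443):.1f} ms")
```

Taking the median of a handful of samples is a design choice: a single measurement can be thrown off by one slow packet, which is exactly the kind of sudden spike the article describes.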

If your website has high latency, it can frustrate users, who may choose a different source for information or products. While all computer networks inherently possess some form of latency, the amount varies and can suddenly increase for various reasons. Latency is the secret enemy of the broadband user, producing internet that downloads huge files at lightning speed and yet navigates websites at a crawl.

The latency on a WiFi network depends almost entirely on the equipment being used and the load on that equipment. Ironically, bandwidth isn't a measure of speed, despite the fact that everyone refers to bandwidth as speed. Why does superfast internet sometimes seem so slow?

Latency measures the round trip: the time it takes to send data and receive a response. Bandwidth measures how much data your internet connection can carry in a given amount of time. The latency of a network connection represents the amount of time required for data to travel between the sender and receiver.

More seriously, though, the best way to explain the difference is by using a pipe as an example. Ideally, latency will be as close to zero as possible. The lower the latency, the better it is for you.

People perceive these unexpected time delays as lag. These terms all describe different aspects of the performance of a broadband connection, and it's important to understand how they relate to each other, because fast broadband does not always mean low latency. However, if the latency is low and the bandwidth is high, that allows for greater throughput and a more efficient connection.
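One way to see how latency and bandwidth combine is a deliberately simple model: the time for a transfer is the waiting time (round trips) plus the time to push the bytes through the pipe. The sketch below uses that model with made-up numbers. It ignores real-world effects such as TCP slow start, packet loss, and parallel requests, so the figures are illustrative only.

```python
def transfer_time_s(size_bytes, bandwidth_bps, rtt_s, round_trips=1):
    """Estimate transfer time: round-trip waiting plus time on the wire.

    bandwidth_bps is in bits per second, rtt_s in seconds.
    """
    return round_trips * rtt_s + (size_bytes * 8) / bandwidth_bps


BANDWIDTH_BPS = 100_000_000  # hypothetical 100 Mbit/s connection
RTT_S = 0.2                  # hypothetical 200 ms round-trip time

# One large 1 GB download: bandwidth dominates, the 0.2 s wait is noise.
big_download = transfer_time_s(1_000_000_000, BANDWIDTH_BPS, RTT_S)

# Fifty small 20 KB requests made one after another: latency dominates.
small_requests = 50 * transfer_time_s(20_000, BANDWIDTH_BPS, RTT_S)

print(f"1 GB download:        {big_download:.1f} s")
print(f"50 sequential 20 KB:  {small_requests:.2f} s")
```

Under these assumptions the large download spends almost all its time moving data, while the fifty small requests move only about a megabyte yet spend roughly ten seconds waiting on round trips.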

Latency refers to how much time it takes for a signal to travel to its destination and back. Network latency refers specifically to delays that take place within a network or on the Internet. However, the time the WiFi access point/router takes to process the data and send it on is included in your latency calculation.

Most users today are on WiFi, and when they have a poor experience on their devices, one of the first questions we need to answer is whether or not WiFi is the culprit. If the latency in a pipe is low and the bandwidth is also low, the throughput will be inherently low. What is zero latency?

How fast data gets from one point to another ultimately comes down to each individual packet of information. The primary cause of latency is physics. How can high-quality video stream flawlessly while a Skype conversation is hampered by delays and freezes?

Since RF travels at the speed of light, the propagation latency over the normal WiFi range is under a millisecond. Network latency can be measured by determining the round-trip time (RTT) for a packet of data to travel to a destination and back again. Latency is typically expressed in units of time, most commonly milliseconds or seconds.
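The sub-millisecond claim is easy to check with distance divided by speed. The sketch below uses the speed of light in a vacuum as a close approximation for radio waves in air; the distances are hypothetical examples of typical WiFi ranges.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # vacuum value, close enough for air


def propagation_delay_ms(distance_m):
    """One-way propagation delay in milliseconds over distance_m metres."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1000.0


# Hypothetical distances between a device and its access point.
for distance_m in (10, 50, 100):
    delay = propagation_delay_ms(distance_m)
    print(f"{distance_m:>3} m: {delay:.6f} ms one way")
```

Even at 100 metres the one-way delay is a tiny fraction of a millisecond, which is why the delay users notice on WiFi comes from equipment and load rather than from the radio signal's travel time.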

Well, for one thing, latency is a way to measure speed. Latency is a measure of how much time it takes for your computer to send signals to a server and then receive a response back. Network latency is the term used to indicate any kind of delay that happens in data communication over a network.